DeepSeek has drawn a lot of attention recently. This post from Partition Magic covers the DeepSeek hardware requirements and shows you how to deploy DeepSeek locally, step by step.

What Is DeepSeek?

DeepSeek is a chatbot created by the Chinese artificial intelligence company DeepSeek. This chatbot can answer questions, solve logic problems, and write computer programs on par with other chatbots.

On 10 January 2025, DeepSeek released the chatbot, based on the DeepSeek-R1 model, for iOS and Android. By 27 January, the DeepSeek app had surpassed ChatGPT as the most downloaded free app on the iOS App Store in the United States.

DeepSeek uses significantly fewer resources compared to its peers. DeepSeek’s competitive performance at a relatively minimal cost has been recognized as potentially challenging the global dominance of American AI models. On 27 January 2025, Nvidia’s stock fell by as much as 17–18%, as did the stock of rival Broadcom.

DeepSeek Requirements

You can use the web version of DeepSeek, but you can also deploy DeepSeek locally on your PC. To do that, your PC should meet the DeepSeek requirements.

#1. DeepSeek GPU Requirements

VRAM needs depend on the model size and the precision. The approximate figures below give the FP16 requirement first and the 4-bit quantization requirement second. The 7B model needs about 16 GB or 4 GB. The 16B model needs about 37 GB or 9 GB. The 67B model needs about 154 GB or 38 GB. The 236B model needs about 543 GB or 136 GB. The 671B model needs about 1,543 GB or 386 GB.

As you can see, the VRAM requirements increase with the model size. For smaller models like the 7B and 16B, a consumer GPU such as the NVIDIA RTX 4090 (24 GB of VRAM) is suitable, although the 16B model only fits on a single card with 4-bit quantization. For larger models, data center-grade GPUs like the NVIDIA H100 or multiple high-end consumer GPUs are recommended.
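
The figures above follow a simple pattern: parameter count times bytes per parameter, plus some headroom. The Python sketch below is only a rough estimator. The 15% overhead factor and the function name `estimate_vram_gb` are assumptions chosen because they land close to the numbers quoted in this post; they are not values published by DeepSeek.

```python
# Rough VRAM estimate: parameters x bytes per parameter x overhead.
# OVERHEAD = 1.15 is an assumption picked to approximate the figures in
# this post; real usage also depends on context length and runtime.
OVERHEAD = 1.15

# FP16 stores each parameter in 2 bytes, 4-bit quantization in half a byte.
BYTES_PER_PARAM = {"fp16": 2.0, "int4": 0.5}


def estimate_vram_gb(params_billion: float, precision: str = "fp16") -> float:
    """Return an approximate VRAM requirement in GB for a model size in billions."""
    return params_billion * BYTES_PER_PARAM[precision] * OVERHEAD


if __name__ == "__main__":
    for size in (7, 16, 67, 236, 671):
        fp16 = estimate_vram_gb(size, "fp16")
        int4 = estimate_vram_gb(size, "int4")
        print(f"{size}B: ~{fp16:.0f} GB (FP16), ~{int4:.0f} GB (4-bit)")
```

Comparing the estimate with the VRAM on your graphics card gives a quick idea of which model sizes are worth trying.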

#2. DeepSeek CPU and RAM Requirements

A powerful multi-core processor, such as a dual AMD EPYC configuration, is recommended. As for RAM, at least 64 GB is advisable for running larger models efficiently.

#3. DeepSeek Storage Requirements

Depending on the model size, the needed disk space could range from tens to hundreds of gigabytes to accommodate the model files and any additional data required for processing.
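
Before downloading a model, it can help to confirm that the target drive has enough free space. The following is a minimal sketch using Python's standard library. The helper names and the 40 GB value are placeholders for illustration; use the size shown for the model you plan to download.

```python
import shutil


def free_space_gb(path: str = ".") -> float:
    """Return the free disk space, in GB, on the drive that contains `path`."""
    return shutil.disk_usage(path).free / 1e9


def has_room_for_model(model_size_gb: float, path: str = ".") -> bool:
    """Check whether the drive holding `path` can store a model of this size."""
    return free_space_gb(path) >= model_size_gb


if __name__ == "__main__":
    # 40 GB is a placeholder value, not a figure from this post.
    print(f"Free space: {free_space_gb():.1f} GB")
    print("Enough room:", has_room_for_model(40))
```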


How to Deploy DeepSeek Locally

If you use DeepSeek frequently and want to customize it to your needs, you may want to deploy DeepSeek locally on your PC. To do that, you can refer to the following guide.

Step 1: Download and install the version of Ollama that matches your system. Ollama is a tool for managing and running large models locally, and it simplifies model downloading and scheduling. The recommended installation location is the C drive.

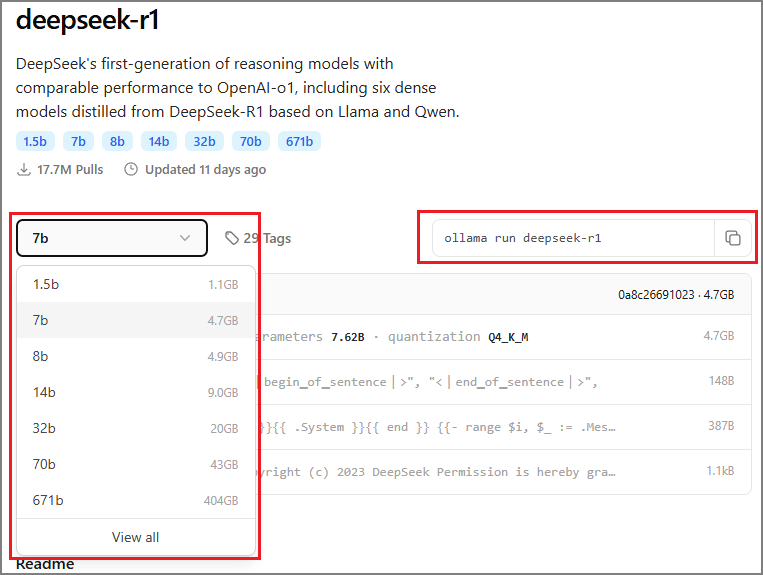

Step 2: Return to the Ollama website, click on the Models tab, and then click deepseek-r1. Select the model version. A larger number means more model parameters, stronger performance, and a higher video memory requirement. Then, copy the command displayed on the page.

Step 3: Press the Windows logo key + R, type “cmd” in the text box, and then press Enter to open Command Prompt. Paste the command that you copied just now and press Enter. The system will automatically start downloading the model.
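
If you would rather script the download than paste the command by hand, the sketch below shows one way to do it from Python. It assumes the Ollama command-line tool is installed and on your PATH, and it uses `ollama pull`, which downloads a model without opening a chat session. The `7b` tag and the helper name `build_pull_command` are only examples; substitute the version you selected in Step 2.

```python
import shutil
import subprocess


def build_pull_command(tag: str) -> list[str]:
    """Build the Ollama CLI command that downloads a deepseek-r1 variant."""
    return ["ollama", "pull", f"deepseek-r1:{tag}"]


if __name__ == "__main__":
    command = build_pull_command("7b")  # example tag, pick the one you selected
    if shutil.which("ollama") is None:
        print("Ollama was not found on PATH; install it first (Step 1).")
    else:
        # check=True raises an error if the download fails.
        subprocess.run(command, check=True)
```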

Step 4: After the download is complete, you have a local copy of DeepSeek that works even when the network is disconnected. At this point, you can type questions directly in the command line to start interacting with the model.
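
Besides the command line, Ollama also runs a local HTTP server that programs can talk to. The sketch below sends one question to it from Python. It assumes Ollama is running on its default address (`http://localhost:11434`) and that you downloaded the `deepseek-r1:7b` version; change the model name to match yours. The helper names `build_request` and `ask` are made up for this example.

```python
import json
import urllib.request

# Default address of the local Ollama server (assumed unchanged).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "deepseek-r1:7b") -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-r1:7b") -> str:
    """Send the prompt and return the text of the model's reply."""
    with urllib.request.urlopen(build_request(prompt, model)) as reply:
        return json.loads(reply.read())["response"]


if __name__ == "__main__":
    try:
        print(ask("Explain what a disk partition is in one sentence."))
    except OSError as error:  # covers connection failures such as URLError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {error}")
```

Setting `"stream": False` asks the server to return the whole answer in one JSON object, which keeps the example short.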

Bottom Line

If you want to deploy DeepSeek locally, your PC needs to meet the DeepSeek requirements. This post has covered those requirements and walked through the deployment steps.
